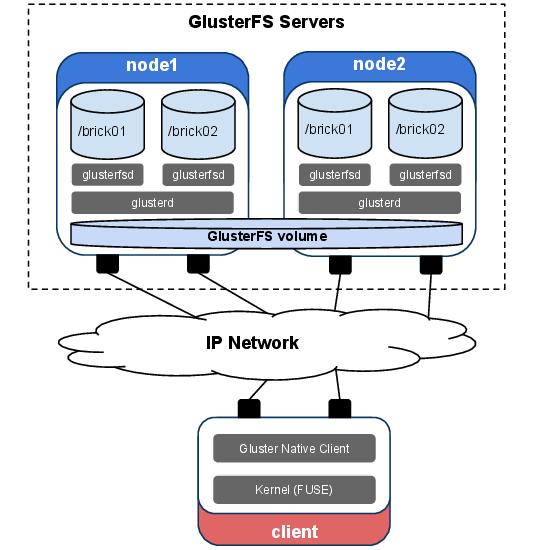

GlusterFS is a distributed file system that aggregates disk storage resources from multiple servers into a single global namespace. It is a distributed, replicated network file system that is fully POSIX compliant and supports storage paradigms such as block storage and object storage.

GlusterFS stores data on stable Linux file systems such as ext4 and XFS. File metadata is kept alongside the data on those file systems, so it doesn't need an additional metadata server.

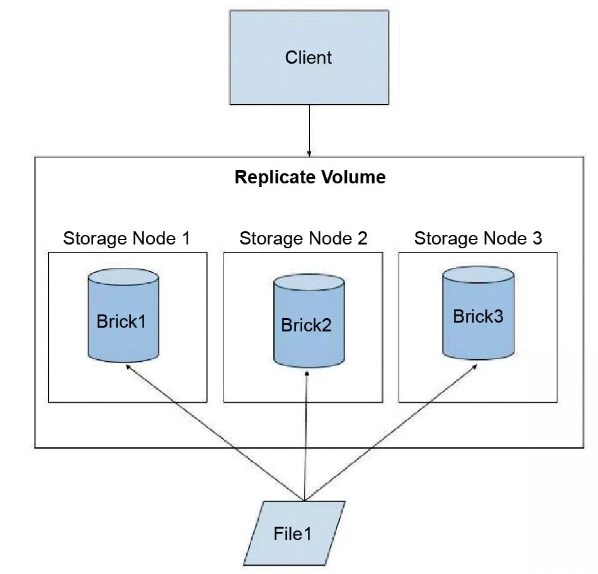

It is possible to build three types of volumes: distributed, replicated and striped. GlusterFS has a well-documented architecture, and I strongly recommend reading the GlusterFS architecture documentation.

Some advantages listed in the GlusterFS architecture documentation:

- Can use any on-disk file system that supports extended attributes

- Accessible using industry-standard protocols like NFS and SMB

- Provides replication, quotas, geo-replication, snapshots and bitrot detection

- Open Source

Here are some basic terms that we will use throughout this post. The GlusterFS documentation covers them in much more detail.

Distributed File System: A file system that allows multiple clients to access data that is spread across the peers of a cluster. The servers let clients share and store data just as if they were working locally.

Cluster: A group of peer computers that work together.

Trusted Storage Pool: A trusted network of storage servers.

FUSE: Filesystem in Userspace (FUSE) is a loadable kernel module that lets non-privileged users create their own file systems without editing kernel code. The FUSE module only provides a bridge to the actual kernel interfaces.

Glusterd: The GlusterFS daemon/service process that needs to run on all members of the trusted storage pool to manage volumes and cluster membership.

Brick: The basic unit of storage in GlusterFS, represented by an export directory on a server in a trusted storage pool.

Volume: A logical collection of bricks.

If you are excited to set up a GlusterFS file system and check its features, read on for more detail about how to install and configure GlusterFS.

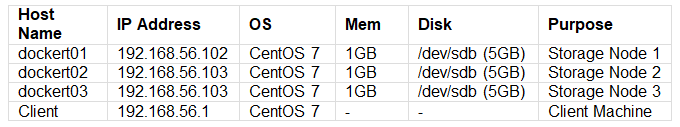

Requirements for installing and testing GlusterFS:

Configure and Install Binaries

DNS configuration

You should define the hostname and IP address of each node in your DNS server or in the hosts file. I prefer to add the entries to the “/etc/hosts” file.

#vi /etc/hosts

192.168.56.102 dockert01

192.168.56.103 dockert02

192.168.56.104 dockert03

192.168.56.1 client
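Since the same entries have to exist on every storage node and on the client, appending them from a small script can avoid typos and duplicate lines. The following is only a sketch under assumptions: `add_host_entry` is a hypothetical helper name (it is not part of GlusterFS), and the optional third argument exists only so the function can be tried against a scratch file before touching “/etc/hosts”.

```shell
# add_host_entry is a hypothetical helper, not part of GlusterFS.
# It appends "IP NAME" to a hosts file unless NAME is already listed there.
add_host_entry() {
    local ip="$1" name="$2" file="${3:-/etc/hosts}"
    # Look for NAME as a whole word on a line that is not commented out.
    if ! grep -Eq "^[^#]*[[:space:]]${name}([[:space:]]|\$)" "$file" 2>/dev/null; then
        printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
}
```

Called as root, for example `add_host_entry 192.168.56.102 dockert01`, it can be run repeatedly without producing duplicate lines.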

Define GlusterFS repository on both client and storage server

Define the GlusterFS repository on all servers in the trusted pool and also on the client. I added the CentOS repository for GlusterFS. You should check which GlusterFS repository belongs to your Linux distribution.

# cat /etc/yum.repos.d/glusterfs.repo

[gluster5]

name=Gluster 5

baseurl=http://mirror.centos.org/centos/7/storage/x86_64/gluster-5/

gpgcheck=0

enabled=1

Install glusterfs-server on storage nodes

#yum install -y glusterfs-server

#systemctl start glusterd

#systemctl enable glusterd

Configure firewall rules

I always prefer to disable the firewalld daemon on my test platform. If you are required to keep firewalld enabled, define firewall rules on all servers and clients.

Disable firewallD:

#systemctl stop firewalld

#systemctl disable firewalld

Or define rules:

#firewall-cmd --permanent --zone=public --add-rich-rule='rule family="ipv4" source address="<ipaddress>" accept'

#firewall-cmd --reload
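The rich rule above accepts all traffic from one source address. A narrower alternative is to open only the ports GlusterFS listens on. The sketch below rests on an assumption you should verify for your version: the default port layout of TCP 24007-24008 for glusterd management and one TCP port per brick starting at 49152. `gluster_firewall_cmds` is a hypothetical helper that only prints the `firewall-cmd` lines, so they can be reviewed before being run.

```shell
# Hypothetical helper: print firewall-cmd lines for a node hosting N bricks.
# Assumed defaults (verify for your release): glusterd on 24007-24008/tcp,
# bricks on consecutive TCP ports starting at 49152.
gluster_firewall_cmds() {
    local bricks="${1:-1}" zone="${2:-public}"
    local first=49152
    local last=$(( first + bricks - 1 ))
    local range="$first"
    # Use a port range only when the node hosts more than one brick.
    if [ "$last" -gt "$first" ]; then
        range="${first}-${last}"
    fi
    echo "firewall-cmd --permanent --zone=${zone} --add-port=24007-24008/tcp"
    echo "firewall-cmd --permanent --zone=${zone} --add-port=${range}/tcp"
    echo "firewall-cmd --reload"
}
```

For the single-brick nodes in this post, `gluster_firewall_cmds 1` prints three commands; once they look right they can be pasted into a root shell on each storage node.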

Create local file system on storage nodes

Add the disk to storage servers

Perform these steps on every server in the trusted storage pool. Add an additional disk to each storage server; its mount point should be separate from the root file system.

[root@dockert01 ~]# fdisk /dev/sdb

Welcome to fdisk (util-linux 2.23.2).

Changes will remain in memory only, until you decide to write them.

Be careful before using the write command.

Device does not contain a recognized partition table

Building a new DOS disklabel with disk identifier 0x7dbe5c4a.

Command (m for help): n --> New Partition

Partition type:

p primary (0 primary, 0 extended, 4 free)

e extended

Select (default p): p

Partition number (1-4, default 1): 1 --> Partition Number

First sector (2048-16777215, default 2048): -->Press Enter

Using default value 2048

Last sector, +sectors or +size{K,M,G} (2048-16777215, default 16777215):

Using default value 16777215

Partition 1 of type Linux and of size 8 GiB is set

Command (m for help): w --> Write Changes to disk

The partition table has been altered!

Calling ioctl() to re-read partition table.

Syncing disks.

[root@dockert01 ~]# mkfs.xfs /dev/sdb1

meta-data=/dev/sdb1 isize=512 agcount=4, agsize=524224 blks

= sectsz=512 attr=2, projid32bit=1

= crc=1 finobt=0, sparse=0

data = bsize=4096 blocks=2096896, imaxpct=25

= sunit=0 swidth=0 blks

naming =version 2 bsize=4096 ascii-ci=0 ftype=1

log =internal log bsize=4096 blocks=2560, version=2

= sectsz=512 sunit=0 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

[root@dockert01 ~]# mkdir -p /data/gluster

[root@dockert01 ~]# mount /dev/sdb1 /data/gluster/

[root@dockert01 ~]# df -h /data/gluster

/dev/sdb1 8.0G 33M 8.0G 1% /data/gluster
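Because the brick should not live on the root file system, it can be worth checking that before creating the volume. This is a minimal sketch: `on_separate_fs` is a hypothetical helper name, and it assumes the mount point contains no spaces, since it reads the sixth column of `df -P` output.

```shell
# Hypothetical helper: succeed only if the given path lives on a file system
# other than "/" (that is, a dedicated disk or partition is mounted there).
on_separate_fs() {
    local path="$1" mnt
    # df -P prints one POSIX-format line per file system;
    # the sixth column of that line is the mount point.
    mnt=$(df -P "$path" 2>/dev/null | awk 'NR == 2 { print $6 }')
    [ -n "$mnt" ] && [ "$mnt" != "/" ]
}
```

On the nodes above, `on_separate_fs /data/gluster && echo ok` should print `ok` once `/dev/sdb1` is mounted. Note that this mount does not survive a reboot unless it is also added to “/etc/fstab”.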

Add Storage nodes to the trusted storage pool

[root@dockert01 ~]# gluster peer probe dockert02

peer probe: success.

[root@dockert01 ~]# gluster peer probe dockert03

peer probe: success.

[root@dockert01 ~]# gluster peer status

Number of Peers: 2

Hostname: dockert02

Uuid: 182c8b4d-cad1-42a3-a77c-e7654c6110e8

State: Peer in Cluster (Connected)

Hostname: dockert03

Uuid: 60ddf4ee-22ea-4c93-bad7-e428c37dc3d5

State: Peer in Cluster (Connected)

[root@dockert01 ~]# gluster pool list

UUID Hostname State

182c8b4d-cad1-42a3-a77c-e7654c6110e8 dockert02 Connected

60ddf4ee-22ea-4c93-bad7-e428c37dc3d5 dockert03 Connected

07f4232c-5da6-4e0f-9b5d-0f65234f751a localhost Connected
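When the pool is built from a script, it helps to confirm that every node reports as connected before going on. The sketch below parses the `gluster pool list` format shown above. `count_connected_peers` is a hypothetical helper name, and it assumes the state is always the last column of each row.

```shell
# Hypothetical helper: count the "Connected" rows in `gluster pool list`
# output read from standard input.
count_connected_peers() {
    # Skip the header row; the state is the last column of every other row.
    awk 'NR > 1 && $NF == "Connected" { n++ } END { print n + 0 }'
}
```

For this three-node pool, `gluster pool list | count_connected_peers` would be expected to print 3, because the local node is listed as well.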

Create GlusterFS volume

You need to create a brick directory called "gvol0" on all storage nodes.

[root@dockert01 ~]# mkdir -p /data/gluster/gvol0

[root@dockert02 ~]# mkdir -p /data/gluster/gvol0

[root@dockert03 ~]# mkdir -p /data/gluster/gvol0

You may want to check the GlusterFS architecture documentation for the supported volume types. I will set up a "Replicated GlusterFS Volume" with 3 replicas. The number of replicas is defined when you create the volume.

***Perform this step on one of the nodes in the trusted pool.***

[root@dockert01 ~]# gluster volume create gvol0 replica 3 dockert01:/data/gluster/gvol0 dockert02:/data/gluster/gvol0 dockert03:/data/gluster/gvol0

volume create: gvol0: success: please start the volume to access data

[root@dockert01 ~]# gluster volume start gvol0

volume start: gvol0: success
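The replica count in the create command has to match the number of bricks for a purely replicated volume like this one. As a sketch of how the command line is assembled, the hypothetical helper `build_replica_create` below prints the command for one brick per host. It assumes every host uses the same brick directory, and it only prints the command rather than running it.

```shell
# Hypothetical helper: print a "gluster volume create" command for a purely
# replicated volume with one brick per host (replica count = number of hosts).
build_replica_create() {
    local volume="$1" brick_dir="$2"
    shift 2
    local bricks="" host
    for host in "$@"; do
        # Each brick is written as host:/path/to/brick-directory.
        bricks="${bricks} ${host}:${brick_dir}"
    done
    echo "gluster volume create ${volume} replica $#${bricks}"
}
```

Calling `build_replica_create gvol0 /data/gluster/gvol0 dockert01 dockert02 dockert03` reproduces the create command used above.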

Get Information about volume

[root@dockert01 ~]# gluster volume info gvol0

Volume Name: gvol0

Type: Replicate

Volume ID: fa97f509-52c9-4243-bdda-a600e549c574

Status: Started

Snapshot Count: 0

Number of Bricks: 1 x 3 = 3

Transport-type: tcp

Bricks:

Brick1: dockert01:/data/gluster/gvol0

Brick2: dockert02:/data/gluster/gvol0

Brick3: dockert03:/data/gluster/gvol0

Options Reconfigured:

transport.address-family: inet

nfs.disable: on

performance.client-io-threads: off
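The two fields worth checking in this output are `Status` and `Number of Bricks`. The sketch below reads them from the `gluster volume info` text format shown above. `volume_started_with_bricks` is a hypothetical helper name, and it assumes the total brick count is the last number on the `Number of Bricks` line.

```shell
# Hypothetical helper: read `gluster volume info <volume>` output on stdin and
# succeed only if Status is "Started" and the total brick count matches $1.
volume_started_with_bricks() {
    local expected="$1"
    awk -F': ' -v want="$expected" '
        $1 == "Status"           { status = $2 }
        # "1 x 3 = 3": the total is the last whitespace-separated token.
        $1 == "Number of Bricks" { n = split($2, parts, " "); total = parts[n] }
        END { exit !(status == "Started" && total == want) }
    '
}
```

A typical call would be `gluster volume info gvol0 | volume_started_with_bricks 3 && echo "gvol0 looks fine"`.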

Test GlusterFS file system

Install the GlusterFS client package on the client machine that needs to access the files.

[root@client mnt]#yum install glusterfs-client-xlators.x86_64

[root@client mnt]#mount -t glusterfs dockert01:/gvol0 /mnt/glusterfs01/

[root@client mnt]#mount -t glusterfs dockert02:/gvol0 /mnt/glusterfs02/

[root@client mnt]#mount -t glusterfs dockert03:/gvol0 /mnt/glusterfs03/

[root@client mnt]#cd /mnt/

[root@client mnt]# ls -lrt glusterfs*

glusterfs:

total 0

glusterfs03: → Node03

total 0

-rw-r--r--. 1 root root 0 Dec 25 15:04 file01

-rw-r--r--. 1 root root 0 Dec 25 15:05 file03

-rw-r--r--. 1 root root 0 Dec 25 15:05 file02

glusterfs02: → Node02

total 0

-rw-r--r--. 1 root root 0 Dec 25 15:04 file01

-rw-r--r--. 1 root root 0 Dec 25 15:05 file03

-rw-r--r--. 1 root root 0 Dec 25 15:05 file02

glusterfs01: → Node01

total 0

-rw-r--r--. 1 root root 0 Dec 25 15:04 file01

-rw-r--r--. 1 root root 0 Dec 25 15:05 file03

-rw-r--r--. 1 root root 0 Dec 25 15:05 file02
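The listing shows the same three files through all three mounts, which is what a replicated volume should give. The same check can be scripted: create a file through one mount point and look for it through the others. This is a minimal sketch. `check_visible_everywhere` is a hypothetical helper name, and it assumes the caller can write to the first mount point.

```shell
# Hypothetical helper: create a marker file through the first mount point and
# check that it is visible through every other mount point given.
check_visible_everywhere() {
    local first="$1" name="probe.$$" mnt rc=0
    shift
    touch "${first}/${name}" || return 1
    for mnt in "$@"; do
        if [ ! -e "${mnt}/${name}" ]; then
            echo "missing on ${mnt}" >&2
            rc=1
        fi
    done
    # Remove the marker again so the volume is left as it was.
    rm -f "${first}/${name}"
    return "$rc"
}
```

With the mounts above, `check_visible_everywhere /mnt/glusterfs01 /mnt/glusterfs02 /mnt/glusterfs03` should return success if all three mounts expose the same volume.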